Why AI Search Is a Black Box (and Why That's Not New)

The uncomfortable truth: search was always a black box. The only thing that changed is that it stopped pretending to be clear.

“AI search is a black box” is one of the most common complaints right now.

It usually sounds technical, but it’s not. It’s emotional. What people really mean is: I don’t know which levers still work, and I don’t like that.

That discomfort is understandable. Rankings disappeared. Attribution is fuzzy. Answers arrive fully formed, with no visible machinery behind them. Compared to the old web, this feels like a loss of transparency.

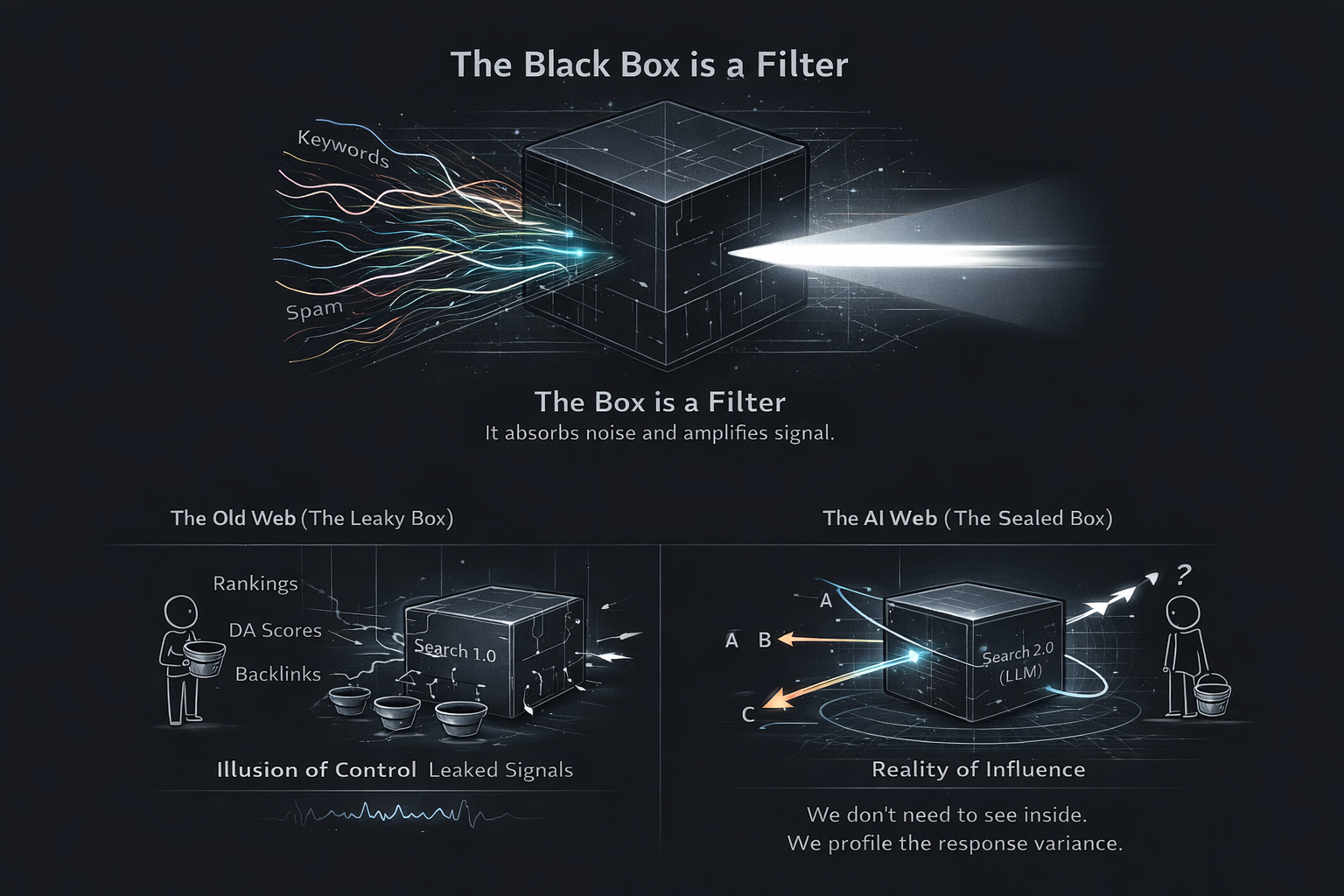

But here’s the uncomfortable truth: search was always a black box.

The only thing that changed is that the box stopped pretending to be clear.

The myth of transparent search

Google never showed you how it worked. It showed you outputs.

The core PageRank idea was published, but how it was weighted in production, along with relevance scoring, quality signals, and spam detection, stayed hidden. What made people feel in control was not transparency, but repeatability. You could run an experiment, see a ranking move, and infer causality. Often incorrectly, but convincingly enough.

That illusion scaled into an industry.

SEO felt legible because the system leaked signals through its interface. Rankings updated slowly. Pages rose and fell in ways that could be graphed. You didn’t understand the engine, but you could map its behavior well enough to act.

That was never the same thing as transparency.

AI search removes those leaks.

When an LLM answers a question, it doesn’t expose intermediate steps. It doesn’t show a ranked list. Even when it lists citations, it doesn’t show how each source shaped the answer. It presents a compressed outcome and moves on.

People interpret that as opacity increasing. In reality, opacity stayed constant. What disappeared was instrumentation.

Black boxes are a scaling problem, not an AI problem

Any system that operates at sufficient scale becomes opaque.

Recommendation systems became black boxes the moment they outgrew human intuition. Credit scoring models were black boxes long before machine learning became fashionable. Even markets are black boxes once enough agents interact.

AI search belongs to this category.

Once you move from deterministic rules to probabilistic synthesis, explanation becomes expensive and incomplete. At scale, you usually trade interpretability for performance. You rarely get both.

So the question isn’t “why is AI search a black box?”

The real question is “why did we expect it not to be?”

Explanation is not control

A subtle mistake people make is equating explanation with agency.

Even in classic SEO, knowing why something ranked didn’t mean you could force it to rank again. It meant you could make educated guesses. Those guesses worked often enough to feel like control.

AI search breaks that feeling because the feedback loops are slower, the signals are more diffuse, and the outputs are aggregated rather than listed.

You don’t get clean cause-and-effect. You get probabilistic influence.

That’s not new. It’s just more honest.

How engineers actually deal with black boxes

When systems stop being interpretable, engineers don’t try to pry them open. That impulse belongs to auditors and philosophers, not people who need systems to work.

They switch methods.

Instead of asking “what’s inside?”, they ask a more useful question: how does this system behave, consistently, under different conditions?

This is not guesswork. It’s a form of applied empiricism.

In practice, inputs are varied deliberately and outputs are observed over time, not once. Patterns are tested across prompts, contexts, and phrasings. Explanations are checked for whether they survive paraphrasing, summarization, and recombination. Sources are examined for which ones are repeatedly drawn from and which are silently ignored.
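To make the source-tracking part of that loop concrete, here is a minimal Python sketch. It assumes a hypothetical `ask(prompt)` function that returns answer text plus the sources the system cited; `Answer`, `ask`, `stub_ask`, and `citation_rates` are illustrative names, not any product's API, and real systems may expose sources differently or not at all.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Answer:
    """Hypothetical shape of one AI search response."""
    text: str
    sources: list[str]  # cited domains, if the system exposes any


def citation_rates(
    ask: Callable[[str], Answer],
    paraphrases: Iterable[str],
    trials: int = 5,
) -> dict[str, float]:
    """Share of all runs (paraphrase x trial) in which each source was cited."""
    counts: Counter[str] = Counter()
    runs = 0
    for prompt in paraphrases:
        # Repeat each phrasing: one run says little about a probabilistic system.
        for _ in range(trials):
            answer = ask(prompt)
            # Count a source at most once per run so repeated citations
            # inside a single answer don't inflate its rate.
            counts.update(set(answer.sources))
            runs += 1
    if runs == 0:
        return {}
    return {source: n / runs for source, n in counts.most_common()}


def stub_ask(prompt: str) -> Answer:
    """Deterministic stand-in for a real AI search call (hypothetical)."""
    sources = ["consensus.example", "consensus.example"]  # cited twice in one answer
    if prompt.lower().startswith("what is"):
        sources.append("glossary.example")
    return Answer(text="(answer text)", sources=sources)


demo_rates = citation_rates(
    stub_ask,
    ["What is X?", "Explain X briefly.", "X, in one sentence."],
    trials=3,
)
print(demo_rates)
```

Re-running the same paraphrase set on a schedule and comparing the rates is one way to separate sources that are repeatedly drawn from and sources that are silently dropped. The default of five trials per phrasing is arbitrary, not a recommendation.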

What this builds is not an explanation of the model. It builds a behavioral profile.

That profile reveals what kinds of language the system treats as stable, which framings it reproduces versus rewrites, where it becomes cautious or evasive, and what it treats as safe knowledge rather than speculative claims.
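One crude way to probe the "reproduces versus rewrites" part of that profile is lexical: check whether a framing appears verbatim in collected answers, shows up with most of its words present, or is absent. The sketch below assumes you already have answers as plain text; `framing_profile` and the 0.5 threshold are hypothetical choices, and word overlap will miss paraphrases that use different vocabulary.

```python
import re


def _words(text: str) -> set[str]:
    """Lowercased word tokens; punctuation is dropped."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def framing_profile(
    framing: str, answers: list[str], threshold: float = 0.5
) -> dict[str, float]:
    """Share of answers that keep a framing verbatim, rewrite it, or drop it.

    verbatim:  the framing appears as-is (case and whitespace normalized).
    rewritten: not verbatim, but at least `threshold` of the framing's
               words appear somewhere in the answer.
    absent:    everything else.
    """
    target = _words(framing)
    if not target:
        raise ValueError("framing must contain at least one word")
    counts = {"verbatim": 0, "rewritten": 0, "absent": 0}
    if not answers:
        return {k: 0.0 for k in counts}
    needle = " ".join(framing.lower().split())
    for answer in answers:
        if needle in " ".join(answer.lower().split()):
            counts["verbatim"] += 1
            continue
        # Containment: fraction of the framing's words present in the answer.
        containment = len(target & _words(answer)) / len(target)
        if containment >= threshold:
            counts["rewritten"] += 1
        else:
            counts["absent"] += 1
    return {k: n / len(answers) for k, n in counts.items()}


profile = framing_profile(
    "AI search is a black box",
    [
        "Many say AI search is a black box.",
        "Critics call AI search opaque, a black box of sorts.",
        "Rankings changed this year.",
    ],
)
print(profile)
```

Tracking these shares for the same framing over time gives a rough signal of whether the system carries your language forward or absorbs only the idea.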

This is how engineers work with compilers, networks, distributed systems, and recommendation engines. They don’t need perfect interpretability. They need predictability at the margins.

AI search is no different.

You cannot see the weights or trace every decision. But you can learn, with surprising reliability, what the system tends to trust and what it refuses to carry forward.

That is what mapping a black box actually means. Not cracking it. Not gaming it. Profiling its behavior until strategy becomes possible again.

What the AI search black box actually optimizes for

Despite the mystique, LLM-based search systems are conservative.

They prefer explanations that align with many sources, language that remains consistent across contexts, definitions that survive paraphrasing, and ideas that remain stable over time.

They avoid novelty without reinforcement, idiosyncratic framing, brittle claims, and explanations that only work once.

This is why some content is quietly absorbed while other content never appears, no matter how optimized it looks.

The box isn’t random. It’s cautious.

The real loss: legibility, not influence

What founders are reacting to isn’t loss of visibility. It’s loss of measurement.

You can no longer point to a ranking and say, “That’s why revenue moved.” Influence now happens upstream, inside the answer layer, before users make explicit choices.

That breaks dashboards. It breaks reporting rituals. It breaks weekly SEO updates.

But it doesn’t break reality.

Your ideas either shape answers or they don’t. The system either reaches for your framing or it ignores it. That is happening whether you can see it or not.

Strategy in an opaque system

Opacity doesn’t make strategy impossible. It changes what strategy looks like.

Instead of optimizing for explicit signals, you optimize for conceptual clarity, linguistic consistency, repeatable explanations, and alignment with broader consensus.

You aim to become a safe source for the system to draw from.

Black boxes don’t reward clever hacks. They reward stability.

The calm conclusion

AI search is a black box.

So was Google.

So is every system that matters at scale.

What’s different now is that the box stopped flattering us with feedback.

If your strategy depended on seeing every lever, it was fragile to begin with.

If your strategy is to produce explanations that hold together under compression, opacity doesn’t hurt you. It filters for you.

The black box isn’t the enemy.

The illusion of control was.